I’ve now lost an SD card inside my optical drive entirely. I now need to pull this lappy apart and find a way to fish it out. I guess it was only a matter of time..

Presentation to KWLUG on i3 and Tiling Window Managers – January 7, 2019

I recently made a presentation to the Kitchener-Waterloo Linux User Group on the topic of i3 and tiling window managers. The folks involved were kind enough to record the audio feed and have made it freely available through archive.org.

If you’re interested, you can check out my presentation (as well as audio feeds for nearly 60 past meetings) at the following link.

My Experience With LPIC-1 Certification

I recently completed the LPIC-1 certification offered by the Linux Professional Institute, which tests candidates on Linux internals and system administration.

LPIC-1 certification is broken down into two exams: 101-400 and 102-400. 101-400 covers topics such as Linux system architecture, installation, package management, devices, and filesystems. Conversely, 102-400 explores shell scripting, X11/GDM, service management, basic network configuration, and security concepts such as user and group permissions. The objectives emphasize the location of crucial system and configuration files, while also delving heavily into command line utilities and their respective options and switches. There’s also a focus on the use of vi as a text editor, as well as some rudimentary exploration of SQL using MariaDB, which I thought was a good general-purpose addition for any aspiring sysadmin.

As the certification is vendor-agnostic, the course objectives cover both Red Hat and Debian derivatives, including their respective package managers and distribution-specific utilities. At times, this became a bit overwhelming, but I understood the need for a prospective Linux sysadmin to work with both alternatives due to their ubiquity and market share. A little more puzzling was an equal focus on both System V and systemd init systems, which feels less essential in the present day. Despite its detractors, systemd has taken hold as a standard in the Linux community, and I wouldn’t be shocked to see System V init abandoned entirely in future versions of the certification.

I was disappointed when I discovered that the exam questions are all multiple-choice. While rote knowledge of the commands and concepts is impressive, the lack of simulation-based content may turn off prospective employers that seek a more practical test of a candidate’s technical knowledge. Many certs have been devalued by cheating and freely available online “brain-dumps”, and I doubt these exams are any exception to the rule.

Speaking as a casual Linux user since the mid-1990s, I was shocked by how unfamiliar the content felt. There’s a particular emphasis on system administration and management that the average user rarely touches. If anything, I think this speaks to the ease of use of most modern Linux distros; for instance, most home users don’t have to consider their hard drive’s partition layout during installation, nor do they have to toil on the command line to configure their system when graphical desktop environments such as GNOME lay the options out in a user-friendly manner and provide all the necessary buttons and sliders.

My study materials were a combination of the LPIC-1 video course available at www.linuxacademy.com, the course objectives from the LPI website, and a selection of “how-tos” gleaned from various websites. It’s important to stress the use of multiple information sources in combination – given the breadth and depth of the exam objectives, I don’t think any one source would have helped me pass the exams on its own.

Drawbacks aside, I feel the certification is still worth the time and money (~$400 US, with various vouchers and discounts available to offset the cost). The knowledge I gained as a relative novice was a good return-on-investment and would serve as a good stepping stone to a more intensive and practical certification such as those offered by Red Hat. As such, I’d recommend LPIC-1 to anyone seeking a certificate reflecting a vendor-agnostic approach to Linux system administration.

Folding@Home and Distributed Computing

Folding@Home is an open-source distributed computing project launched by Stanford University professor Vijay Pande in October 2000. It aids in disease research by simulating the myriad ways in which proteins “fold” or assemble themselves to perform some basic function. Though protein folding is an essential biological process, mis-folding can lead to diseases such as Parkinson’s, Huntington’s, and Alzheimer’s. Consequently, the examination of folding models can help scientists understand how these diseases develop and assist in designing drugs to combat their effects. As of March 2018, 160 peer-reviewed papers have been published based on results obtained from Folding@Home simulations.

Distributed computing describes the method of a larger task being broken down into portions and shared across multiple computers. In the context of Folding@Home, client PCs download a “work unit” from the project’s work servers, perform the computational work needed to model the protein’s folding, then re-upload the results to a server when complete. The workload behind this folding is significant, and there may be a large number of work units involved in one specific model.
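To make the work-unit idea a little more concrete, here is a minimal sketch in Python. It is not how the Folding@Home client actually works; the function names are my own, the "task" is just a list of numbers, and a thread pool stands in for a fleet of volunteer PCs.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_work_units(task, unit_size):
    """Break a large task (here, a list of numbers) into fixed-size work units."""
    return [task[i:i + unit_size] for i in range(0, len(task), unit_size)]


def process_work_unit(unit):
    """Hypothetical stand-in for the heavy computation a client does on one unit."""
    return sum(x * x for x in unit)


def run_project(task, unit_size, clients=4):
    """Hand work units out to 'clients' and combine the results they send back."""
    units = split_into_work_units(task, unit_size)
    # Each worker thread plays the role of one volunteer PC: it picks up a
    # unit, computes a result, and returns it to the "server" for aggregation.
    with ThreadPoolExecutor(max_workers=clients) as pool:
        results = list(pool.map(process_work_unit, units))
    return sum(results)


if __name__ == "__main__":
    print(run_project(list(range(1000)), unit_size=100))
```

The key property is that each unit can be processed independently, so the order in which clients finish doesn't matter to the final result.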

The Folding@Home client software is installed on a user’s PC and is commonly configured to sit idle until the PC is left unattended for a period of several minutes, similar to how one would use a desktop screensaver. The software then consumes idle CPU and GPU resources to perform its task (this is also configurable, as pushing CPU and GPU usage also increases energy consumption and results in excess heat generation). Several computing platforms are supported; beyond the commonly available Windows, OS X, and Linux clients, versions of the software have also been developed for the Sony PlayStation 3 and Android mobile devices.

Though distributed computing models are extremely common in a business/scientific context (weather modelling, graphic rendering, and cryptocurrency mining all share a similar approach), most of these examples rely on a centralized cluster of computers owned by a single company or research organization, often benefitting the financial interests of the singular organization rather than accomplishing a public good.

In contrast, Folding@Home is part of a smaller subset of “volunteer computing” projects intended to reach its goal through harnessing the computational resources of hobbyists. SETI@Home is arguably the most well-known of these projects and is devoted to the search for extraterrestrial life based on the analysis of radio signals. Enigma@Home has assisted in decoding previously-unbroken messages encrypted by the German Enigma machines during the Second World War. The European research organization CERN also threw its own hat in the ring by offloading portions of its research around the Large Hadron Collider to volunteer computing enthusiasts.

Such projects are not always benevolent: distributed and volunteer computing can also be used as a destructive force. Botnets and distributed denial-of-service (DDoS) attacks are two common examples of this phenomenon. The LOIC (Low Orbit Ion Cannon) is a notable DDoS application used by the Anonymous group over the past decade to deny public access to websites they deemed objectionable.

I’ve personally been lending my resources to Folding@Home since the summer of 2010 and recently reached the top 1% of nearly 2 million contributors. Beyond the obvious philanthropic qualities of the project, it’s also taught me a great deal about a variety of computer science concepts. Hardware selection, performance tuning, and performance monitoring all play an important part in optimizing a folding cluster, making Folding@Home a great starting point for aspiring home labbers and sysadmins.

You can find out more about Folding@Home here – http://folding.stanford.edu/

Design Flaw

The Dell Precision M4700 laptop, while generally dependable, has a woeful quirk in its construction that’s caused a good share of frustration for yours truly.

The Mini-SD card slot is located on the left side of the laptop, situated just millimetres above the slot-loading optical combo drive.

Many a time, I’ve grabbed a card off my desk, blindly reached around the side of the chassis, and inadvertently popped the card right into that slot-loading drive.

Crap. Reach for a paperclip, or a credit card or a key or something like that. Hope that it doesn’t get hauled into the drive as well, while we’re at it.

It’s probably happened a half-dozen times in the last year.

I mean, this wouldn’t be a problem if I didn’t keep making the same mistake over and over.

What’s that whole saying about a chain only being as strong as its weakest link?

Documentary Review – Viva Amiga: The Story of a Beautiful Machine (2017)

Viva Amiga: The Story of a Beautiful Machine is a 2017 documentary by director/producer Zach Weddington detailing the history of Amiga Inc. and their eponymous line of home computers. The company’s trajectory is charted from its beginnings in the early 1980s, through its acquisition by Commodore International and launch of the Amiga 1000 in 1985, into the platform’s demise in the mid-1990s. Weddington crowd-funded the project from a 2011 Kickstarter campaign, culling interviews from former Amiga and Commodore employees including engineers Bil Herd and Dave Haynie, software developer Andy Finkel, and Amiga 500 hardware mastermind Jeff Porter.

Considerable care is taken to properly frame the Amiga’s story within the context of the 1980s home computer market, which was substantially more heterogeneous in terms of brands and hardware versus its modern counterpart. Though the Amiga was best positioned to compete with the features and target audience of the Apple Macintosh, the home-computing scene was also saturated with offerings from big-business monolith IBM, Atari (headed up by Commodore expat Jack Tramiel), and Tandy/Radio Shack. The Amiga unfortunately also competed with Commodore’s own C128, which it had marketed in parallel as a more cost-effective alternative, undercutting the Amiga’s adoption.

A large portion of Viva Amiga charts the development of the Amiga 1000 under the direction of company founder Jay Miner, who demonstrated his faith in the project by taking out a second mortgage to help finance its production. Footage of the 1985 launch event is included and does a superb job of illustrating the excitement and novelty surrounding the A1000 at the time. Other elements of the launch reflect current tech industry tropes: guest appearances (insert Andy Warhol here), shaky software demos, and the promise of cutting-edge products with little or no actual stock available.

Pause for personal reflection: I first laid hands on a hand-me-down Amiga 500 in 1994, accompanied by the requisite stack of cracked-and-duplicated floppy disks. Most of my hours were spent playing games like Wings and Life and Death, but I also remember being intrigued by the skeuomorphic approach of the Amiga Workbench. Having never seen a Mac or Atari ST, I was shocked by the intuitiveness of this GUI and its relation to a real-world working environment, especially when taking the software’s age into account. Next to the Amiga 500 sat an IBM PC clone running DOS 6.22 and Windows 3.1. Windows had desktop icons and program groups; Workbench had file drawers, folders, and a recycle bin.

With this point in mind, the documentary takes a compelling turn when examining the Amiga’s role in digital content creation, broaching the subject of computing for its own sake versus computing as a means to an end. With the Amiga’s simple, effective user interface and increased graphics and sound capabilities relative to those of its predecessors, an argument is made for the platform as a pioneering media production tool, eliminating a layer of abstraction between the operator and computer and allowing users to seamlessly explore traditionally non-digital creative fields such as animation, music composition, and visual art (the latter of these no doubt spurred by the release of a non-copy-protected version of Deluxe Paint). The massive popularity of NewTek’s Video Toaster is treated with the appropriate level of gravitas, while the documentary’s commentators also point to the platform’s foothold in the CGI and 3D modeling fields.

The Amiga’s eventual downfall is almost universally attributed to Commodore’s miserable approach to marketing and odd placement in the retail sphere, issues which were compounded following the ouster of COO Thomas Rattigan in 1987. Commodore’s fate is sealed by misguided projects such as the Amiga CD32 and CDTV, segueing into a two-pronged epilogue: the failure of larger commercial ventures based around the Amiga’s intellectual property, as well as an overview of the homebrew “Amiga-in-name” hardware and software released by enthusiasts throughout the rest of the 1990s into the present day.

Viva Amiga best serves as an entry point to the Amiga oeuvre for budding retrocomputing enthusiasts or tech historians, while offering a hefty dose of nostalgia and fuzzy-feelings to veterans and die-hards. Given the esoteric nature of the subject matter, some familiarity with the platform is assumed, but the narrative never becomes too technical. A deep selection of file footage highlights the major events, the interviews are well-edited and relevant, and the interviewees are dynamic and engaging (particularly Workbench architect RJ Mical, who propels Viva Amiga’s watch-ability up a few notches on sheer enthusiasm alone).

Viva Amiga: The Story of a Beautiful Machine (official website)

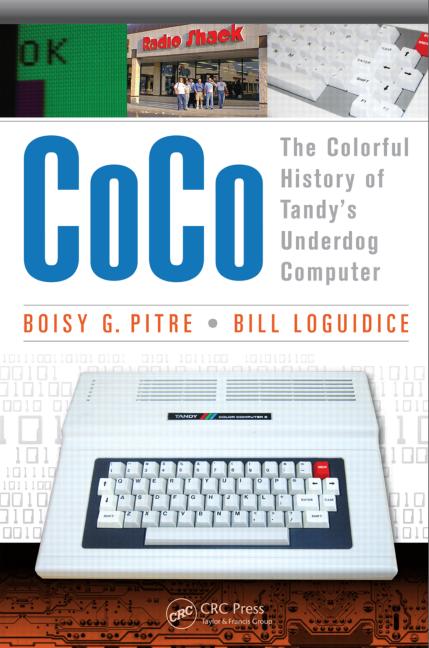

Book Review – CoCo: The Colorful History of Tandy’s Underdog Computer

I recently picked up a copy of “CoCo: The Colorful History of Tandy’s Underdog Computer”, written by Boisy Pitre and Bill Loguidice, charting the evolution of Tandy’s TRS-80 Color Computer line. The book mainly covers a period from the inception of the value-oriented product line in 1980 through to the cancellation of the Tandy CoCo 3 in 1991. However, it also touches on Tandy’s beginnings as the Hinckley-Tandy Leather Company, its ownership of the Radio Shack chain through which the CoCo was sold, and the CoCo’s origins as the VIDEOTEX text terminal.

As the home computing market of the 1980s was characterized by fierce competition and a seemingly endless number of hardware models and platforms, I was happy to be going into this history with a bit of personal context. My first PC was a hand-me-down CoCo 1, received in 1994, and I have some fond memories of its unique look and feel. The computer was simple in appearance and operation for a novice, and I had no trouble picking up the nuances of Color BASIC, even if I may have spent more time fiddling with the RF modulator to keep a consistent image on my old TV. With that being said, I was largely unaware of the unit’s backstory, and I was surprised to read how well-supported and influential the CoCo line would grow to be.

There’s a lot of information to be packed into less than 200 pages, and the book sometimes feels like more of a broad survey than a detailed history, especially when dealing with the significance of software releases for the line. However, Pitre and Loguidice make a point of concisely covering all aspects of the unit’s impact on the computing scene of the time, be it the hardware design, Tandy’s notorious approach to cost-cutting, clones such as the Dragon 32, or the surging third-party developer community that sprang up as users began to flock to the platform. Additionally, the book includes a large selection of color images featuring marketing materials, advertisements, screenshots, and photos of the hardware and software.

I felt like this book worked especially well as a snapshot of the CoCo user community. Falsoft’s RAINBOW magazine was documented extensively, as was the rush of ill-fated user-conceived CoCo 3 successors following Tandy’s official discontinuation of the product line. As this sort of information relies on the memories and accounts of those involved, it can be especially fleeting compared to the more readily available details regarding Tandy’s side of the CoCo canon, and the authors’ efforts to preserve this history are appreciable.

Overall, I’m of the opinion that hardened CoCo veterans may find this effort a little more rewarding than more casual readers/PC history buffs. While there are many great anecdotes to be found throughout, I feel the story of the CoCo series tends to pale a little compared to its 80s contemporaries, lacking the sheer industry-wide impact of Apple or hubris of Commodore. Regardless, the book breezes by, and the vast amount of insight to be found relating to the machines’ development and marketing should still spark some interest in nostalgists and neophytes.

You can buy a copy of the book here.

Mistakes We All Make #2: One Man’s Trash..

A recent CBC news story detailing the recovery of a 30-gigabyte hard drive containing personally-identifiable information of military personnel at a local recycling depot compelled me to share a similar experience regarding improper disposal and sanitization of IT assets.

In my previous article, I described how I had begun to build a CCNA test lab to help in my studies, including a few 2651XM routers that I purchased from a wholesaler via eBay. While exploring their file systems and experimenting with backup and restore through TFTP, I happened upon a curious text file in the root directory.

It quickly became apparent that this router was once owned by a major US telecommunications company and contained its share of information that the organization would likely prefer to keep private.

In this case, the evidence existed not in the unit’s startup config (which had likely been erased both by the telecom and the wholesaler prior to the router being re-sold), but in a file labelled with a .old extension, suggesting it had been used as a backup before a configuration change was made.

From this configuration, I could determine:

– The name and username of the user who edited the configuration file.

– The hostname of the router.

– A long list of sub-domains associated with the company’s domain name.

– The IPs of the name servers being used.

– The fact that the user used TACACS+, the IPs of the TACACS+ servers, and the key being used.

– Telnet and console passwords, using level 7 encryption that was easily broken. To the admin’s credit, the passwords were technically strong in their use of a larger keyspace through upper-case/lower-case/numbers/symbols. However, they were very generic, and I would not be shocked if they had been re-used across network devices.

– The hostnames to which each port on the switch connected (left in the description of the interfaces) and the VLANs with which they were associated.

– The IPs of logging servers.

– A long list of permitted IPs in the unit’s ACL.

– SNMP community strings.

– The physical location of the router, as specified in the EXEC banner. (Cross-referencing this information through Google resulted in quite a few contact telephone numbers.)

A couple of years have passed since the router was decommissioned, but even if only one or two items in this list are still relevant, they clearly compromise the security of the network in question.
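To illustrate why I called the level 7 encryption “easily broken”: it is a reversible obfuscation rather than a hash, using a fixed, publicly known key. The sketch below is my own minimal Python take on the widely documented decoding scheme, not output from any Cisco tool. The key string is the commonly published constant (treat it as an assumption if you need to rely on it), and the sample value is a well-known test string for the password “cisco”, not anything from the router in question.

```python
# Commonly published fixed key for Cisco IOS "type 7" obfuscation (assumed
# here from public write-ups, not taken from the router described above).
XLAT = "dsfd;kfoA,.iyewrkldJKDHSUBsgvca69834ncxv9873254k;fg87"


def decode_type7(encoded):
    """Reverse a type 7 string: two decimal digits of offset, then hex bytes."""
    offset = int(encoded[:2])           # starting position within the key
    data = bytes.fromhex(encoded[2:])   # obfuscated password bytes
    # Each byte is XORed with successive key characters, wrapping around.
    return "".join(
        chr(b ^ ord(XLAT[(offset + i) % len(XLAT)]))
        for i, b in enumerate(data)
    )


if __name__ == "__main__":
    print(decode_type7("0822455D0A16"))
```

Since no secret is involved, anyone holding the config file effectively holds the plaintext passwords, no matter how complex those passwords are.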

Nowadays, most users are aware that data can be recovered from a hard drive or SSD, and are careful to securely dispose of these components. However, non-volatile storage extends beyond the scope of these examples, and not everyone will make similar considerations when dealing with devices such as the aforementioned router.

Furthermore, even if a component doesn’t contain any personal information or financial data, these details can form the basis of further attacks centered around social engineering, or tip off attackers to probable vulnerabilities. Poking around a switch or router isn’t likely to give an attacker any credit card numbers, but it’s an awfully strong first step toward doing so, if this is one’s intent.

It’s easy to find even more egregious examples in the consumer electronics realm. Consider how many cell phones get tossed to the curb without a second thought once their users accidentally smash the screen or kill the battery, and instead decide to move to the latest and greatest model. A PlayStation or Xbox found at a pawn shop might contain cached login credentials, friend lists, purchase histories, and saved network profiles. Wherever non-volatile storage exists, the potential for exploiting its contents exists as well. Even if this information is encrypted and seems secure at the moment, there’s no telling if it might become accessible in the future.

Of course, if you’ve been tasked with operating a computer in the last 20-30 years, this is likely a speech that you’ve heard many times before. However, this sort of advice need not be exclusively aimed at those who Post-It their login credentials to their monitor, or those whose password is “password”. As professional responsibility increases, so does the sensitivity of the information that is handled, and everyone is well-served to pay mind to an occasional reminder of the consequences of lazy or short-sighted security policies.

Mistakes I Made #1: The Quest for Ping

Here’s one in a series of many stories of failure, frustration, and figuring things out.

I’ve been studying for Cisco’s CCNA certification recently and decided to purchase some older gear in order to set up a test lab, familiarize myself with IOS, and get used to the physical wiring/configuration of these devices. Thus far, I’ve had some fun putting everything together, learned a lot in a short span of time, and I can still afford rent. My first router came in the mail the other day, so I figured I’d set up a basic layout in order to test its functionality.

The goal was simple enough: 2 hosts (a Windows 7 laptop and a Raspberry Pi running Raspbian), 2 2950 switches, and a 2651XM router in the middle. Set them up, ping back and forth.

Simple enough, right? If you answered “yes”, congratulations on being as arrogant as I was. This simple setup threw me for a loop, taking several hours to properly configure and causing me to question the hardware involved, basic routing and networking theory, and my sanity in general.

With a plan in place and success assumed, off I went. Console to each switch, config, console to the router, config. I assigned IPs to the router’s Ethernet ports, defined my routes. Interfaces are up, green LEDs everywhere, I’m good to go.

And then I ping, and then it fails. So I ping from the other PC, and it still fails. Hmm. Basic network troubleshooting skills kick in, and I start pinging out from the host to each successive link. At both sides, all goes well until pinging the router’s external interface, the point where the two networks connect and my packets fall off a cliff. This doesn’t make sense! Those interfaces are physically connected. How could the router be so stupid as to not know how to direct the requests? I check the routing table, all looks well. I run out of ideas and begin to gradually cycle between the stages of the Kübler-Ross model of grief.

To make this whole process a little easier, each PC also had a wireless interface connected to my home network, enabling me to SSH/TeamViewer in to either. This was my first mistake. As the host had no idea where the 192.168.3.x network was, it was sending the ping requests out of the wrong interface.

Previously, I had set up an IP for the management VLAN on each switch, which ended up being helpful in solving the last few pieces of the puzzle. I hopped back onto the switches and found that once I had the default gateways properly configured to the router’s connected interface, I could ping between the switches, crossing the router. OK, so the router’s good, switches can communicate, but pings from the hosts stop at the router’s internal interface, and pings from the switch to the host stop at the switch on the other side. Feeling defeated and figuring I’d probably be a networking philistine for the rest of my life, I decide to sleep on it. I wake up the next morning, the eureka moment hits, and I scramble back to the PCs.

I configure static routes from the PCs to the router’s internal interfaces for the networks needed.
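For anyone wondering why the static routes mattered, here’s a rough Python sketch of how a host picks a route: the most specific (longest-prefix) match wins, and the default route catches everything else. This is a simplification (real operating systems also weigh metrics), and apart from the 192.168.3.x network mentioned above, every address and interface name here is a hypothetical stand-in for my lab.

```python
import ipaddress
from typing import NamedTuple, Optional


class Route(NamedTuple):
    network: str            # destination network in CIDR notation
    gateway: Optional[str]  # next hop, or None if directly connected
    interface: str          # hypothetical interface name


def pick_route(routes, destination):
    """Return the matching route with the longest prefix, or None."""
    dest = ipaddress.ip_address(destination)
    matches = [r for r in routes if dest in ipaddress.ip_network(r.network)]
    if not matches:
        return None
    return max(matches, key=lambda r: ipaddress.ip_network(r.network).prefixlen)


# Before the fix: the only path to unknown networks is the wireless default route.
ROUTES_BEFORE = [
    Route("0.0.0.0/0", "192.168.1.1", "wlan0"),  # home network default gateway
    Route("192.168.1.0/24", None, "wlan0"),      # home wireless network
    Route("192.168.2.0/24", None, "eth0"),       # lab network on the wired port
]

# After the fix: a static route sends the far lab network via the lab router.
ROUTES_AFTER = ROUTES_BEFORE + [Route("192.168.3.0/24", "192.168.2.1", "eth0")]

if __name__ == "__main__":
    print(pick_route(ROUTES_BEFORE, "192.168.3.10").interface)
    print(pick_route(ROUTES_AFTER, "192.168.3.10").interface)
```

With the first table, the ping to the far network only matches the default route and heads out over wireless; with the static route added, the more specific /24 entry takes over and the traffic goes to the lab router instead.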

Ping from the Windows PC: Great success!

Ping from the Linux PC: Great fail!

Wait, what?

I double-check the route, it looks OK. I’m perplexed. I start to wonder if the guys from “Windows Tech Support” that keep calling me can actually help out on this one.

Here’s my second mistake: I’d become so conditioned to pinging within the same subnet on Windows machines that I assumed it also worked the same way for pinging from an external network. This is not so. Windows Firewall blocks ICMP echo requests from external networks, and these must be allowed in order for external hosts to ping the machine. This explains why I was able to ping from the adjacent switch and router, but not across the router’s interfaces.

Windows Firewall > Advanced Settings > New Inbound Rule > Custom Rule > Protocol Type > ICMPv4 > Any IP > NextNextNextNextNextNext problem solved. I am now a hero in my own mind.

What are the takeaways from this ordeal? A. Consider how the hosts will route if multiple interfaces are configured and share a default gateway without any static routes. B. Consider how different operating systems react to traffic and requests. C. Question the obvious. D. Don’t get cocky.